Data Error Analysis Brief

Prepared by [YOUR NAME] for [YOUR COMPANY NAME]

Executive Summary

The "Data Error Analysis Brief" offers a comprehensive examination of the challenges posed by data errors in today's data-driven landscape. From their various forms and underlying causes to their far-reaching impacts on decision-making and organizational outcomes, this brief sheds light on the pervasive nature of data errors. By emphasizing proactive measures, robust validation protocols, and a culture of data integrity, it equips organizations with the knowledge and tools necessary to effectively identify, address, and prevent errors in their datasets. Through collaborative efforts and a commitment to continuous improvement, stakeholders can navigate the complexities of data errors with confidence, ensuring that their data remains a reliable foundation for informed decision-making and sustainable growth.

Data Collection and Validation

Data collection forms the bedrock of any analytical endeavor, but the integrity of insights derived from this data is only as strong as the accuracy and reliability of the underlying information. Hence, robust data collection and validation processes are imperative to ensure the fidelity of analyses and decisions based on them. This section explores the pivotal role of data collection and validation in mitigating errors and enhancing data quality.

Data Collection:

Effective data collection begins with a clear understanding of the objectives, variables, and stakeholders involved. Whether sourced from internal systems, third-party providers, or manual inputs, data collection methodologies must be meticulously designed to capture relevant information while minimizing errors. Automated data capture tools, such as sensors, APIs, and web scraping scripts, offer efficiency and consistency, but human intervention remains indispensable for contextual understanding and error detection.

Key considerations in data collection include:

Sampling Strategy: Ensuring representativeness and minimizing bias through a random, stratified, or systematic sampling approach.

Data Consistency: Standardizing data formats, units, and conventions to facilitate comparability and compatibility across sources.

Data Governance: Establishing clear protocols for data ownership, access rights, and usage permissions to uphold confidentiality and compliance.

Data Documentation: Maintaining comprehensive metadata, data dictionaries, and lineage records to track data provenance and facilitate traceability.
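To illustrate the stratified approach named under Sampling Strategy, the following Python sketch draws the same fraction of records from each stratum so that small groups are not lost. It is a minimal example under stated assumptions: records are plain dictionaries, the stratum is a single field such as a hypothetical `region`, and `stratified_sample` is an illustrative helper rather than part of any particular tool.

```python
import random
from collections import defaultdict


def stratified_sample(records, key, fraction, seed=0):
    """Draw about `fraction` of the records from every stratum defined by `key`.

    Sampling each stratum at the same rate keeps small groups represented,
    which a simple random sample of the whole population can miss.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")

    # Group records by their stratum value (e.g. region, product line).
    strata = defaultdict(list)
    for record in records:
        strata[record[key]].append(record)

    rng = random.Random(seed)  # fixed seed makes the sample reproducible
    sample = []
    for group in strata.values():
        # Take at least one record per stratum so no group disappears.
        k = max(1, round(len(group) * fraction))
        sample.extend(rng.sample(group, k))
    return sample


# Hypothetical population: 90 northern and 10 southern customers.
customers = [{"id": i, "region": "north" if i < 90 else "south"} for i in range(100)]
picked = stratified_sample(customers, key="region", fraction=0.1)
```

In this example a 10% stratified sample returns 9 northern and 1 southern record, mirroring the population's mix.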

Validation Techniques:

Once collected, data should undergo rigorous validation to identify and rectify errors, anomalies, and inconsistencies. Validation encompasses a spectrum of techniques, ranging from basic sanity checks to sophisticated statistical analyses, each tailored to the nature and complexity of the data under scrutiny. Some common validation techniques include:

Field-level Validation: Verifying data completeness, format adherence, and range constraints at the individual attribute level.

Cross-field Validation: Detecting logical inconsistencies and dependencies between related data fields, such as age and date of birth.

Data Profiling: Exploratory analysis to identify patterns, distributions, and outliers within the dataset.

Statistical Analysis: Leveraging descriptive and inferential statistics to assess data quality, distributional assumptions, and correlation structures.

Machine Learning Models: Training predictive models to flag anomalous data points or predict missing values based on existing patterns.
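The field-level and cross-field checks described above can be sketched in Python as a function that returns a list of problems for a single record. The field names (`customer_id`, `email`, `age`, `date_of_birth`), the 0 to 120 age range, and the deliberately simple email test are assumptions chosen for illustration; real rules would come from the organization's own data dictionary.

```python
from datetime import date


def age_on(dob: date, as_of: date) -> int:
    """Whole years between a date of birth and a reference date."""
    years = as_of.year - dob.year
    # Subtract one if the birthday has not yet occurred in the reference year.
    if (as_of.month, as_of.day) < (dob.month, dob.day):
        years -= 1
    return years


def validate_record(record: dict, as_of: date) -> list[str]:
    """Return human-readable problems found in one record (empty list if none)."""
    errors = []

    # Field-level checks: completeness, format, and range.
    if not record.get("customer_id"):
        errors.append("customer_id is missing")
    email = record.get("email") or ""
    if "@" not in email:  # simplistic format check, for illustration only
        errors.append(f"email {email!r} is not in a valid format")
    age = record.get("age")
    if not isinstance(age, int) or not 0 <= age <= 120:
        errors.append(f"age {age!r} is outside the range 0-120")

    # Cross-field check: the stated age must agree with the date of birth.
    dob = record.get("date_of_birth")
    if isinstance(dob, date) and isinstance(age, int):
        expected = age_on(dob, as_of)
        if age != expected:
            errors.append(f"age {age} conflicts with date_of_birth (implies {expected})")
    return errors


record = {"customer_id": "C001", "email": "ana@example.com",
          "age": 30, "date_of_birth": date(1990, 6, 15)}
problems = validate_record(record, as_of=date(2024, 1, 1))
```

Here the record passes every field-level check but fails the cross-field check, because a birth date of 15 June 1990 implies an age of 33 on 1 January 2024.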

Best Practices:

To enhance the effectiveness of data collection and validation efforts, organizations should adopt the following best practices:

Continuous Monitoring: Implementing real-time monitoring systems to detect data anomalies and drifts as they occur.

Iterative Improvement: Soliciting feedback from data consumers and stakeholders to iteratively refine data collection processes and validation criteria.

Cross-functional Collaboration: Engaging diverse stakeholders, including data engineers, domain experts, and end-users, to ensure alignment between data requirements and business objectives.

Documentation and Auditing: Documenting validation procedures, assumptions, and outcomes to facilitate reproducibility, auditability, and regulatory compliance.

Training and Education: Providing comprehensive training programs to enhance data literacy and cultivate a culture of data stewardship across the organization.
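A minimal sketch of the Continuous Monitoring practice, assuming each incoming batch of a numeric field is compared against a baseline mean and standard deviation computed from trusted historical data. The `check_batch` helper and its thresholds (5% missing values, a mean shift of 3 baseline standard deviations) are hypothetical defaults, not recommended values.

```python
import statistics


def check_batch(values, baseline_mean, baseline_stdev,
                max_missing_rate=0.05, max_shift=3.0):
    """Flag a batch of a numeric field that looks anomalous against a baseline.

    `values` may contain None for missing readings; `baseline_stdev` must be > 0.
    Returns a list of alert strings (empty if the batch looks normal).
    """
    alerts = []

    # Completeness drift: share of missing readings in this batch.
    missing = sum(v is None for v in values)
    missing_rate = missing / len(values) if values else 1.0
    if missing_rate > max_missing_rate:
        alerts.append(f"missing rate {missing_rate:.1%} exceeds {max_missing_rate:.1%}")

    # Distribution drift: shift of the batch mean, measured in units of the
    # baseline standard deviation.
    present = [v for v in values if v is not None]
    if present:
        batch_mean = statistics.fmean(present)
        shift = abs(batch_mean - baseline_mean) / baseline_stdev
        if shift > max_shift:
            alerts.append(f"mean {batch_mean:.2f} drifted {shift:.1f} stdevs from baseline")
    return alerts
```

In practice such a check would run on every new load, with alerts routed to the data owner; more rigorous drift tests exist, but the principle of comparing live data against an agreed baseline is the same.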

Data collection and validation constitute indispensable pillars of data error management, laying the groundwork for reliable analyses, insights, and decision-making. By embracing systematic approaches, leveraging advanced technologies, and fostering a culture of data quality, organizations can mitigate errors, enhance data integrity, and unlock the full potential of their data assets.

Analysis of Errors and Discrepancies

In data analysis, errors and discrepancies can introduce significant challenges, potentially leading to inaccurate insights, flawed conclusions, and misguided decisions. Understanding the types, causes, and implications of these errors is essential for effective error management and data quality enhancement. This section examines where errors and discrepancies originate, how they manifest, and how they can be remediated.

Types of Errors and Discrepancies:

Data errors and discrepancies can manifest in various forms, each with distinct characteristics and implications. Some common types include:

Data Entry Errors: Mistakes introduced during manual data entry processes, such as typographical errors, transcription errors, or duplication of entries.

Systematic Bias: Biases inherent in data collection methodologies, such as sampling bias, selection bias, or response bias, that lead to skewed representations of the population.

Outliers and Anomalies: Data points that deviate significantly from the norm, potentially indicating measurement errors, data corruption, or rare events.

Inconsistencies: Discrepancies between related data fields or across data sources, arising from inconsistencies in data formats, definitions, or semantics.

Missing Values: Absence of data in certain records or fields, due to data collection errors, non-response, or data processing failures.
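Several of these error types can be surfaced with a simple profiling pass. The Python sketch below assumes records are dictionaries with a key field and a numeric value field (the `order_id` and `amount` names and the `profile_errors` helper are hypothetical) and reports duplicated keys, missing values, and outliers. Outliers are flagged with Tukey's fences, that is, values more than 1.5 times the interquartile range beyond the quartiles, which is one common rule of thumb among several.

```python
import statistics
from collections import Counter


def profile_errors(rows, key_field, value_field):
    """Summarize duplicates, missing values, and outliers in a list of records."""
    # Duplicates: non-empty key values that appear more than once.
    key_counts = Counter(row.get(key_field) for row in rows)
    duplicates = sorted(k for k, n in key_counts.items() if k is not None and n > 1)

    # Missing values: records with no value in the field.
    missing = sum(1 for row in rows if row.get(value_field) is None)

    # Outliers: values outside Tukey's fences (1.5 * IQR beyond the quartiles).
    values = [row[value_field] for row in rows if row.get(value_field) is not None]
    outliers = []
    if len(values) >= 4:
        q1, _, q3 = statistics.quantiles(values, n=4)
        iqr = q3 - q1
        low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
        outliers = [v for v in values if v < low or v > high]

    return {"duplicate_keys": duplicates, "missing": missing, "outliers": outliers}


# Hypothetical order data containing one of each error type.
orders = [
    {"order_id": "A1", "amount": 10}, {"order_id": "A2", "amount": 10},
    {"order_id": "A2", "amount": 11}, {"order_id": "A3", "amount": 11},
    {"order_id": "A4", "amount": 12}, {"order_id": "A5", "amount": 12},
    {"order_id": "A6", "amount": 13}, {"order_id": "A7", "amount": 500},
    {"order_id": "A8", "amount": None},
]
report = profile_errors(orders, "order_id", "amount")
```

On this sample the profile reports `A2` as a duplicated key, one missing amount, and 500 as an outlier. Whether 500 is a data entry error or a genuine rare event still requires human review.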

Causes and Impacts:

The causes of data errors and discrepancies are multifaceted, stemming from diverse sources across the data lifecycle. These include:

Human Factors: Human error in data entry, validation, or interpretation, influenced by factors such as fatigue, inattention, or lack of training.

Technological Limitations: System glitches, hardware failures, software bugs, or compatibility issues that compromise data integrity during collection, storage, or transmission.

Sampling and Measurement Biases: Systematic errors introduced by flawed sampling designs, measurement instruments, or data collection protocols that lead to distorted results.

Process Inefficiencies: Inadequate quality control measures, insufficient validation procedures, or fragmented data governance frameworks that exacerbate error propagation.

External Factors: Environmental factors, market dynamics, regulatory changes, or unforeseen events that introduce unpredictability and volatility into the data.

The impacts of data errors and discrepancies can be far-reaching, affecting decision-making, operational efficiency, and stakeholder trust. These include:

Misguided Decisions: Erroneous insights leading to suboptimal strategies, resource allocation, or risk management decisions.

Reputational Damage: Loss of credibility, trust, and stakeholder confidence due to perceived incompetence or negligence in data management.

Financial Losses: Monetary losses stemming from erroneous transactions, miscalculations, or compliance violations resulting from inaccurate data.

Regulatory Non-Compliance: Legal repercussions, fines, or sanctions imposed for failing to adhere to data privacy, security, or reporting regulations.

Opportunity Costs: Missed opportunities for innovation, growth, or competitive advantage due to reliance on flawed or incomplete data.

Remediation Strategies:

Addressing data errors and discrepancies requires a multifaceted approach that combines proactive prevention measures with reactive remediation strategies.

Key remediation strategies include:

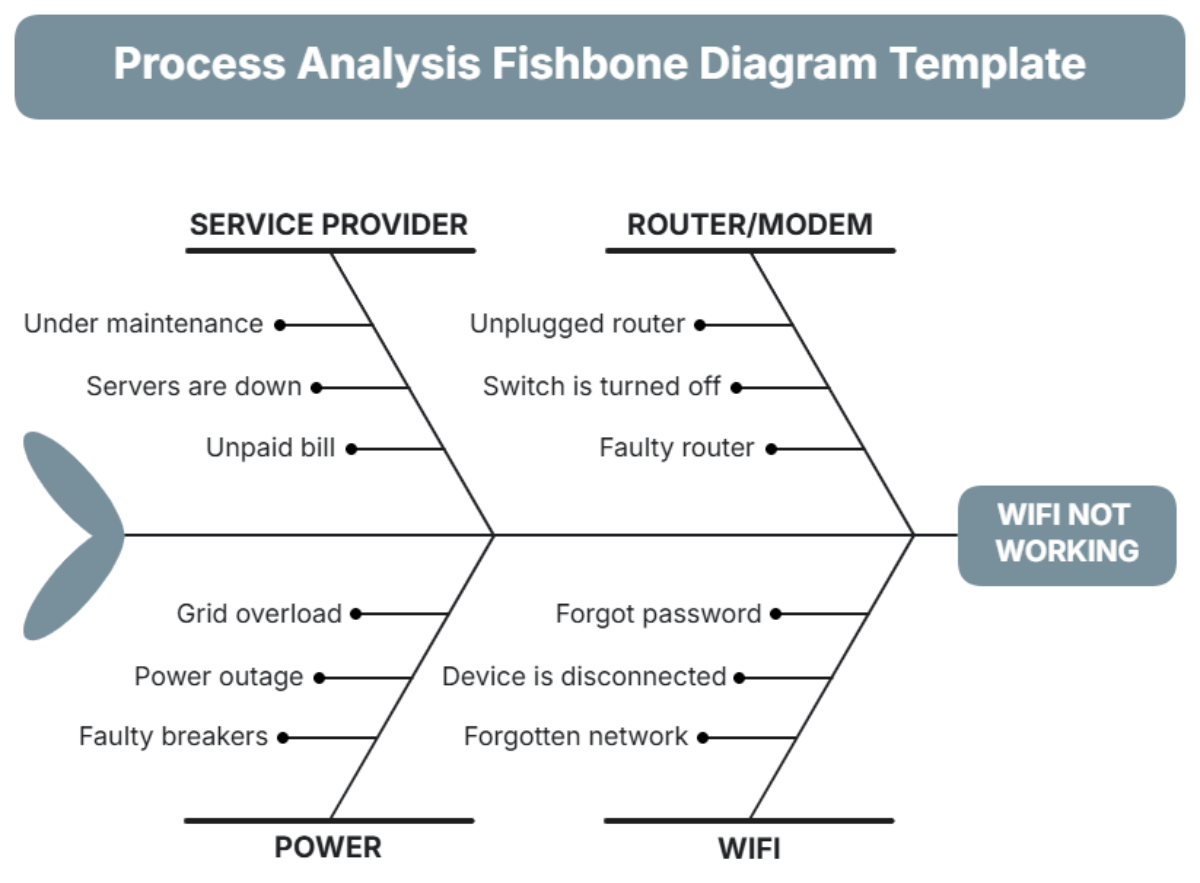

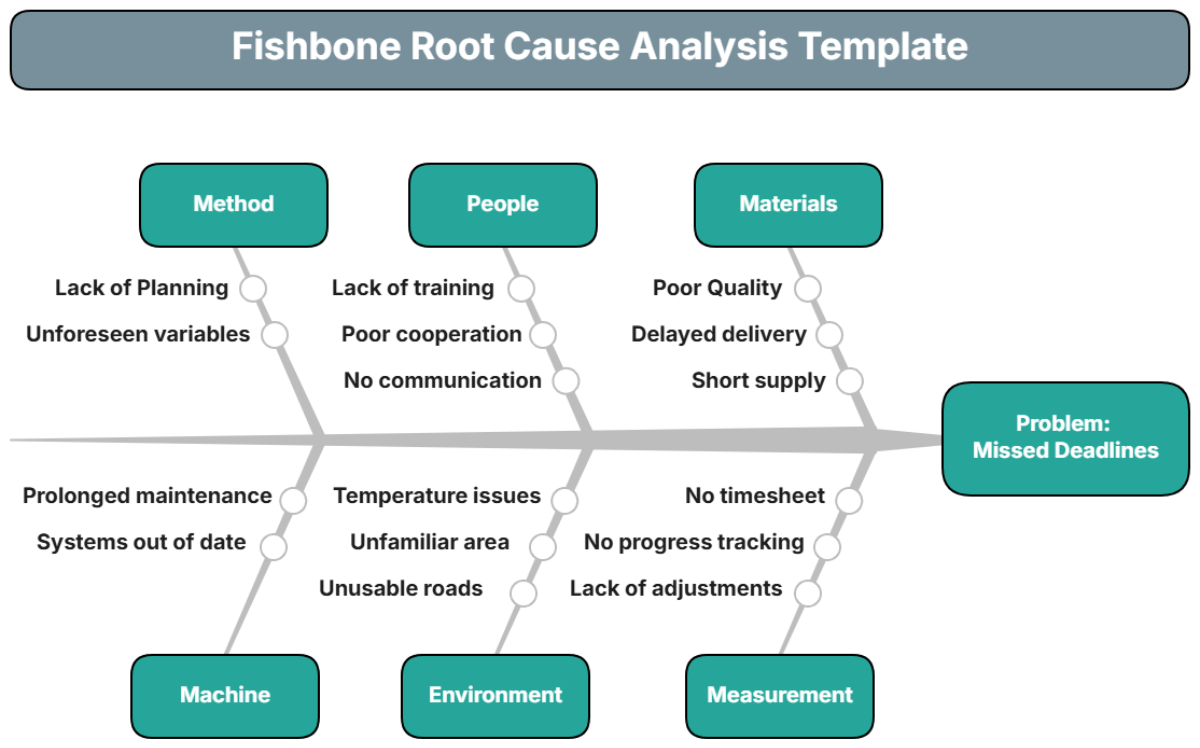

Root Cause Analysis: Identifying the underlying causes of errors through systematic investigation and analysis of data workflows, processes, and controls.

Data Cleaning and Imputation: Implementing data cleansing techniques, such as outlier detection, deduplication, and missing value imputation, to rectify errors and enhance data quality.

Process Automation: Leveraging automation tools and algorithms to streamline data validation, error detection, and correction processes, reducing manual intervention and human error.

Quality Assurance Frameworks: Establishing robust quality assurance frameworks, including validation protocols, data governance policies, and audit trails, to enforce data quality standards and ensure accountability.

Continuous Improvement: Instituting a culture of continuous improvement, feedback, and learning to iteratively refine data management practices, address emerging challenges, and adapt to evolving requirements.
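The Data Cleaning and Imputation strategy can be illustrated with a short Python sketch that removes duplicate records by key and fills missing numeric values with the median of the remaining values. Keeping the first occurrence of each key, choosing the median, and adding an `_imputed` flag column are assumptions made for the example; the appropriate deduplication and imputation rules depend on the data and how it is used.

```python
import statistics


def clean(rows, key_field, value_field):
    """Deduplicate on a key, then fill missing numeric values with the median."""
    # Deduplication: keep the first record seen for each key.
    seen = set()
    unique = []
    for row in rows:
        key = row[key_field]
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))  # copy so the caller's data is untouched

    # Imputation: the median is less sensitive to outliers than the mean.
    present = [r[value_field] for r in unique if r.get(value_field) is not None]
    fill = statistics.median(present) if present else None
    for r in unique:
        was_missing = r.get(value_field) is None
        if was_missing:
            r[value_field] = fill
        # Record which values were imputed so later audits can trace them.
        r[f"{value_field}_imputed"] = was_missing
    return unique


raw = [
    {"id": 1, "amount": 10},
    {"id": 1, "amount": 10},    # duplicate entry
    {"id": 2, "amount": None},  # missing value
    {"id": 3, "amount": 30},
]
cleaned = clean(raw, "id", "amount")
```

Flagging imputed values, rather than silently overwriting gaps, keeps the cleaning step consistent with the audit-trail goals of a quality assurance framework.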

The analysis of errors and discrepancies in data is essential for maintaining data integrity, reliability, and trustworthiness. By understanding the types, causes, and impacts of data errors, organizations can implement proactive measures and remediation strategies to mitigate risks, enhance data quality, and derive meaningful insights that drive informed decision-making and sustainable business outcomes.

Impact Analysis

Financial Impact:

Data errors can have direct financial consequences, affecting revenue, costs, and profitability in several ways:

Transaction Errors: Inaccurate data in financial transactions can result in billing errors, payment discrepancies, and financial losses due to incorrect invoicing or overpayments.

Investment Decisions: Erroneous data used for investment analysis or financial forecasting can lead to misguided investment decisions, portfolio underperformance, or losses.

Compliance Costs: Non-compliance with regulatory reporting requirements due to data errors may result in fines, penalties, or legal expenses.

Operational Inefficiencies: Inefficient processes resulting from data errors, such as inventory discrepancies or supply chain disruptions, can increase operational costs and reduce productivity.

Reputational Impact:

The trust and confidence of stakeholders, including customers, partners, and investors, can be eroded by data errors, leading to reputational damage:

Customer Trust: Inaccurate or incomplete customer data can undermine trust, satisfaction, and loyalty, impacting customer retention and brand reputation.

Investor Confidence: Misleading financial reports or erroneous performance metrics can diminish investor confidence, affecting stock prices and market perception.

Regulatory Compliance: Publicized instances of regulatory non-compliance due to data errors can tarnish the organization's reputation and credibility with regulators and the public.

Media Scrutiny: Negative publicity resulting from data breaches or high-profile errors can damage the organization's image and brand equity, leading to long-term reputational harm.

Operational Impact:

Data errors can disrupt organizational processes, workflows, and decision-making, resulting in operational inefficiencies and suboptimal performance:

Decision-Making Delays: Inaccurate or inconsistent data can impede timely decision-making, leading to missed opportunities or reactive rather than proactive responses to market dynamics.

Resource Allocation: Misallocation of resources based on flawed data analysis can lead to inefficiencies, missed revenue opportunities, or overinvestment in underperforming initiatives.

Customer Experience: Poor data quality can result in subpar customer service experiences, such as billing errors, delivery delays, or incorrect product recommendations, impacting customer satisfaction and loyalty.

Employee Morale: Frustration and demotivation among employees tasked with correcting data errors or dealing with the consequences of flawed analyses can lead to decreased morale and productivity.

Strategic Impact:

Data errors can have broader strategic implications, affecting the organization's competitiveness, innovation capabilities, and long-term viability:

Competitive Disadvantage: Inaccurate market data or flawed competitor analysis can lead to strategic missteps, loss of market share, or missed opportunities for differentiation.

Innovation Constraints: Data errors can inhibit data-driven innovation initiatives, such as predictive analytics or machine learning applications, limiting the organization's ability to capitalize on emerging trends or customer insights.

Strategic Planning: Misguided strategic decisions based on flawed data can undermine the organization's long-term growth trajectory, hindering agility and adaptability in a rapidly evolving business landscape.

Organizational Resilience: Failure to address data errors effectively can weaken the organization's resilience to external shocks, such as economic downturns or regulatory changes, amplifying risks and vulnerabilities.

Recommendations and Future Protocols

Building on the insights gleaned from the analysis of data errors and their impacts, this section outlines actionable recommendations and future protocols for organizations to enhance their data error management practices. By implementing these recommendations, organizations can mitigate risks, improve data quality, and foster a culture of continuous improvement in data governance and stewardship.

Establish Clear Data Governance Frameworks:

Define roles, responsibilities, and accountability for data quality management across the organization.

Establish data governance policies, standards, and procedures to ensure consistency and compliance.

Implement mechanisms for ongoing monitoring, enforcement, and review of data governance practices.

Invest in Data Quality Assurance Tools and Technologies:

Deploy data quality management tools and software solutions to automate error detection, cleansing, and validation processes.

Leverage advanced analytics and machine learning algorithms to identify patterns, anomalies, and outliers indicative of data errors.

Integrate data quality metrics and monitoring dashboards into existing data management systems for real-time visibility and actionable insights.
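As an example of the data quality metrics a monitoring dashboard might display, the sketch below computes completeness (share of records with a value) and validity (share of populated values that pass a rule) for each field. The `quality_metrics` helper, the field names, and the rules are hypothetical.

```python
def quality_metrics(rows, rules):
    """Compute completeness and validity rates per field.

    `rules` maps a field name to a predicate that returns True for a valid value.
    """
    total = len(rows)
    metrics = {}
    for field, is_valid in rules.items():
        present = [r[field] for r in rows if r.get(field) is not None]
        valid = sum(1 for v in present if is_valid(v))
        metrics[field] = {
            "completeness": len(present) / total if total else 0.0,
            # Validity is measured over populated values only.
            "validity": valid / len(present) if present else 0.0,
        }
    return metrics


rows = [
    {"email": "a@x.com", "age": 30},
    {"email": "bad", "age": 200},
    {"email": None, "age": 40},
    {"email": "c@y.org", "age": None},
]
rules = {"email": lambda v: "@" in v, "age": lambda v: 0 <= v <= 120}
scores = quality_metrics(rows, rules)
```

Separating completeness from validity helps a dashboard show whether a problem stems from data that is absent or from data that is present but wrong.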

Enhance Data Collection and Validation Processes:

Standardize data collection methodologies, formats, and protocols to improve consistency and comparability.

Implement robust validation checks and controls at each stage of the data lifecycle to detect and prevent errors proactively.

Conduct periodic audits and validation exercises to assess data quality, identify areas for improvement, and refine validation criteria.

Promote Data Literacy and Training Programs:

Provide comprehensive training programs and resources to enhance data literacy and awareness among employees at all levels.

Offer specialized training in data validation techniques, error detection, and resolution strategies to empower data stewards and analysts.

Foster a culture of continuous learning and knowledge sharing through workshops, seminars, and peer-to-peer mentoring initiatives.

Implement Cross-Functional Collaboration Mechanisms:

Facilitate collaboration and communication between data management teams, domain experts, and end-users to align data requirements with business objectives.

Establish cross-functional data governance committees or working groups to address complex data quality challenges and drive consensus on remediation strategies.

Encourage feedback loops and knowledge sharing across departments to facilitate a holistic approach to data error management.

Embrace Agile and Iterative Improvement Approaches:

Adopt agile methodologies and iterative improvement cycles to incrementally enhance data quality and error management processes.

Prioritize high-impact data errors and discrepancies based on their potential consequences and address them iteratively through targeted interventions.

Continuously monitor and evaluate the effectiveness of data error management initiatives, soliciting feedback from stakeholders and adapting strategies as needed.
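Prioritizing errors by potential consequence can be made concrete by ranking error categories by estimated impact, here taken as monthly frequency multiplied by an estimated cost per occurrence. The categories and figures below are invented purely for illustration, and this scoring rule is one simple option among many; it ignores, for instance, reputational or regulatory effects that are hard to price.

```python
from dataclasses import dataclass


@dataclass
class ErrorCategory:
    name: str
    occurrences_per_month: int
    cost_per_occurrence: float  # estimated cost of a single occurrence

    @property
    def expected_monthly_impact(self) -> float:
        # Simple expected-cost score: frequency times unit cost.
        return self.occurrences_per_month * self.cost_per_occurrence


def prioritize(categories):
    """Return error categories ordered by estimated monthly impact, highest first."""
    return sorted(categories, key=lambda c: c.expected_monthly_impact, reverse=True)


# Hypothetical figures for illustration only.
backlog = [
    ErrorCategory("missing postcodes", 900, 2.0),
    ErrorCategory("duplicate invoices", 40, 250.0),
    ErrorCategory("wrong currency codes", 5, 4000.0),
]
ranked = prioritize(backlog)
```

With these figures the rare but expensive currency errors (20,000 per month) rank above the frequent but cheap missing postcodes (1,800 per month), which reflects the aim of weighing consequence rather than volume alone.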

Stay Abreast of Emerging Technologies and Best Practices:

Track emerging trends, technologies, and best practices in data quality management through industry forums, conferences, and professional networks.

Experiment with innovative approaches such as blockchain-based data validation, decentralized data governance models, or AI-powered anomaly detection to stay ahead of evolving challenges.

Foster partnerships with academic institutions, research organizations, and technology vendors to explore cutting-edge solutions and co-create future-proof data error management protocols.

By implementing these recommendations and future protocols for data error management, organizations can fortify their data infrastructure, mitigate risks, and unlock the full potential of their data assets. By prioritizing data quality, fostering collaboration, and embracing a culture of continuous improvement, organizations can navigate the complexities of data error management with confidence, resilience, and agility in an increasingly data-driven world.

Conclusion

Effective data error management is paramount for ensuring the reliability, accuracy, and trustworthiness of data-driven decision-making. By understanding the types, causes, and impacts of data errors and discrepancies, organizations can prioritize their mitigation efforts. Robust data governance frameworks, investment in data quality assurance tools, stronger data collection and validation processes, data literacy programs, and cross-functional collaboration together reduce risk and raise data quality. Embracing agile methodologies, fostering a culture of continuous improvement, and staying abreast of emerging technologies and best practices are crucial for navigating the evolving landscape of data error management. Ultimately, by prioritizing data quality and error management, organizations can unlock the full potential of their data assets, drive innovation, and achieve sustainable business success.