Explanatory Research in Machine Learning

Prepared by: [Your Name]

Date: [Date]

1. Introduction

As machine learning models become more integral to decision-making processes across various industries, their interpretability and explainability have gained significant importance. This research aims to explore the methodologies used to explain machine learning models, providing insights into their predictive mechanisms and the influence of various features.

2. Literature Review

The literature review summarizes current techniques and research on model interpretability:

Historical Perspectives: Early methods of model interpretability included basic visualization tools and statistical summaries. Over time, these techniques have evolved to incorporate more sophisticated algorithms designed to handle complex models.

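Current Techniques: Modern approaches such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) have become standard tools for explaining machine learning models. SHAP assigns each feature an additive contribution to an individual prediction, based on Shapley values from cooperative game theory, and these contributions can be aggregated into a global measure of feature importance. LIME explains an individual prediction by fitting a simple, interpretable surrogate model in the neighborhood of that prediction.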
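To make the idea behind SHAP concrete, the following is a minimal sketch of exact Shapley attributions computed by enumerating every feature coalition. It is not the `shap` library and not the procedure used in this research; the function `shapley_values`, the toy scoring function, and the all-zero baseline are assumptions chosen for illustration. Features outside a coalition are replaced by their baseline value, which is one common but simplified convention.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley attributions for one input x relative to a baseline.

    Features outside a coalition are replaced by their baseline value.
    Cost grows as 2^n in the number of features, so this suits toy cases only.
    """
    n = len(x)
    features = range(n)

    def value(coalition):
        # Evaluate the model with coalition features taken from x
        # and all remaining features taken from the baseline.
        mixed = [x[i] if i in coalition else baseline[i] for i in features]
        return predict(mixed)

    phi = [0.0] * n
    for i in features:
        others = [j for j in features if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical toy scoring function with one interaction term.
def toy_model(v):
    return 2.0 * v[0] + 1.0 * v[1] + 0.5 * v[0] * v[2]


x = [1.0, 3.0, 2.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
```

In this toy case the attributions sum to the difference between the prediction at `x` and the prediction at the baseline, and the interaction term is split equally between the two features involved. Practical SHAP implementations rely on approximations or model-specific algorithms because full enumeration is exponential in the number of features.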

Challenges and Limitations: Despite advancements, challenges remain in scaling explanation techniques for large models, addressing high-dimensional data, and balancing model performance with interpretability.

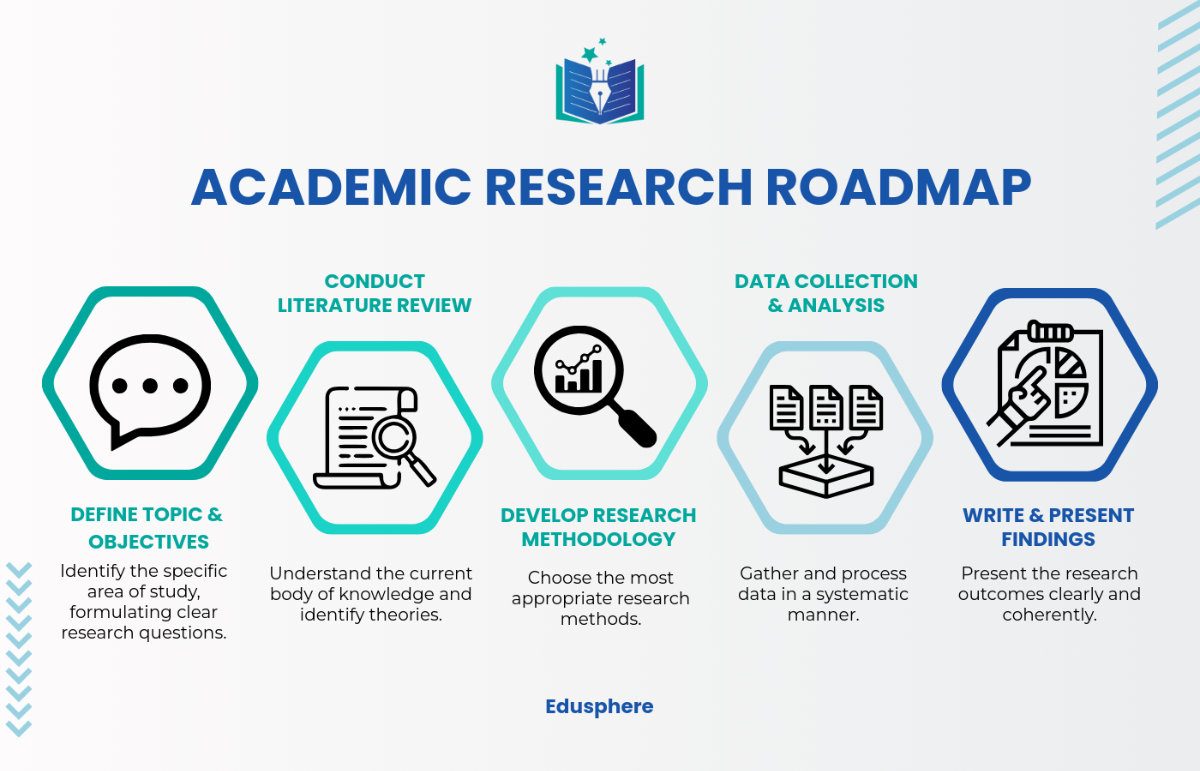

3. Methodology

The methodology section details the approach used in this research:

Data Collection: The study used datasets from several sectors, including finance (credit scoring), healthcare (patient diagnostics), and retail (customer behavior). These datasets were selected to cover a broad range of applications and model complexities.

Model Selection: A variety of models was chosen for analysis, including logistic regression, random forests, and gradient-boosted machines. Each model was selected based on its relevance and complexity.

Explanation Techniques: SHAP and LIME were employed to analyze feature importance and to explain individual model predictions. Additional tools, such as partial dependence plots and feature interaction graphs, were used to visualize the impact of individual features.
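As an illustration of what a partial dependence plot computes, the sketch below forces one feature to each value on a grid and averages the model's predictions over the dataset. The function `partial_dependence`, the toy model, and the three-row dataset are hypothetical and are not taken from this study.

```python
def partial_dependence(predict, rows, feature, grid):
    """Average prediction when `feature` is forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)       # copy so the original row is untouched
            modified[feature] = value  # overwrite only the feature of interest
            total += predict(modified)
        curve.append(total / len(rows))
    return curve


# Hypothetical model and data, for illustration only.
def toy_model(v):
    return 2.0 * v[0] + v[1] ** 2


rows = [[0.0, 1.0], [1.0, 2.0], [2.0, 3.0]]
grid = [0.0, 1.0, 2.0]
pd_curve = partial_dependence(toy_model, rows, feature=0, grid=grid)
```

Because the toy model is additive in its first feature, the resulting curve rises by 2.0 per unit of that feature. When features are strongly correlated, this averaging evaluates the model on unrealistic combinations, so such curves should be read with care.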

4. Results and Analysis

The results section presents key findings:

Feature Importance: SHAP analysis showed that in a credit scoring model, features such as payment history and income were most influential. In healthcare models, features like age and previous diagnoses were critical in determining predictions.
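A ranking of this kind is commonly obtained by averaging the absolute SHAP value of each feature over a dataset. The sketch below shows that aggregation for the simplest case, a linear model with features treated as independent, where the attribution of feature i on one row reduces to w_i * (x_i - mean_i). The feature names, weights, and rows are invented for illustration; they are not the study's data or results.

```python
from statistics import mean

# Hypothetical scaled features and linear weights; NOT the study's data.
feature_names = ["payment_history", "income", "account_age"]
weights = [1.5, 0.8, 0.1]
rows = [
    [0.9, 0.2, 0.5],
    [0.1, 0.7, 0.4],
    [0.5, 0.9, 0.6],
    [0.3, 0.4, 0.5],
]

# Column means act as the reference point for each attribution.
col_means = [mean(col) for col in zip(*rows)]


def linear_shap(row):
    """Per-feature attribution w_i * (x_i - mean_i) for a linear model."""
    return [w * (v - m) for w, v, m in zip(weights, row, col_means)]


attributions = [linear_shap(row) for row in rows]

# Global importance: mean absolute attribution per feature.
importance = {
    name: mean(abs(a[i]) for a in attributions)
    for i, name in enumerate(feature_names)
}
ranking = sorted(importance, key=importance.get, reverse=True)
```

For non-linear models such as gradient-boosted machines the per-row attributions have no closed form, but the aggregation step (mean absolute attribution per feature, then sorting) is the same.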

Model Behavior: Decision trees offered straightforward explanations, while more complex models like gradient-boosted machines required advanced techniques for interpretability. For instance, SHAP values helped elucidate how feature interactions affected predictions in these more complex models.
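One simple way to see whether two features interact is a second-order difference against a baseline, which is zero whenever the model is additive in those two features. The sketch below uses this check in place of SHAP interaction values, which are more involved; the function `pairwise_interaction` and both toy models are hypothetical, and all other features are held at their baseline values.

```python
def pairwise_interaction(predict, x, baseline, i, j):
    """Second-order difference for features i and j relative to a baseline.

    Zero when the model is additive in i and j between these two points.
    """
    def mix(use_i, use_j):
        v = list(baseline)
        if use_i:
            v[i] = x[i]
        if use_j:
            v[j] = x[j]
        return predict(v)

    return mix(True, True) - mix(True, False) - mix(False, True) + mix(False, False)


# Hypothetical models for illustration.
def additive(v):
    return 2.0 * v[0] + 3.0 * v[1]


def interacting(v):
    return 2.0 * v[0] + 3.0 * v[1] + 0.5 * v[0] * v[1]


x, baseline = [2.0, 4.0], [0.0, 0.0]
additive_effect = pairwise_interaction(additive, x, baseline, 0, 1)
interacting_effect = pairwise_interaction(interacting, x, baseline, 0, 1)
```

The additive model yields no interaction effect, while the second model yields exactly the contribution of its product term at `x`. This is the kind of structure that single-feature summaries cannot show on their own.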

Case Studies: The explanation techniques were applied to a credit scoring model and a medical diagnosis model. SHAP effectively highlighted feature importance in both cases, while LIME provided useful local explanations for individual predictions.
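The local explanations attributed to LIME can be understood through the following sketch of its core idea: sample points around the instance, weight them by proximity, and fit a weighted linear model whose coefficients serve as the explanation. This is not the `lime` package and omits its feature selection and discretization steps; the names `local_surrogate` and `solve`, the Gaussian sampling, the kernel width, and the black-box function are all assumptions made for illustration.

```python
import math
import random


def solve(a, b):
    """Solve the linear system a @ x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[k]] for k, row in enumerate(a)]
    for col in range(n):
        # Partial pivoting: bring the largest remaining entry to the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [m[k][n] / m[k][k] for k in range(n)]


def local_surrogate(predict, x, n_samples=2000, scale=0.5, width=1.0, seed=0):
    """Fit a proximity-weighted linear model around x.

    Returns [intercept, coef_0, coef_1, ...].
    """
    rng = random.Random(seed)
    d = len(x)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]  # perturbed neighbor
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        w = math.exp(-dist2 / width ** 2)  # closer samples count more
        row = [1.0] + z
        y = predict(z)
        # Accumulate the weighted normal equations X^T W X and X^T W y.
        for p in range(d + 1):
            xtwy[p] += w * row[p] * y
            for q in range(d + 1):
                xtwx[p][q] += w * row[p] * row[q]
    return solve(xtwx, xtwy)


# Hypothetical black-box model, nonlinear in the first feature.
def black_box(v):
    return v[0] ** 2 + 3.0 * v[1]


coefs = local_surrogate(black_box, x=[1.0, 2.0])
```

Near the point `[1.0, 2.0]` the fitted coefficients approximate the local slopes of the black box, roughly 2 for the first feature and 3 for the second. The explanation is only valid in that neighborhood, and it depends on the sampling scale and kernel width, which is a known sensitivity of this family of methods.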

5. Discussion

The discussion interprets the results and their implications:

Implications for Model Transparency: Enhancing model transparency through advanced explanation techniques fosters trust and understanding among stakeholders. This is particularly important in sectors where decisions have significant effects, such as finance and healthcare.

Impact on Stakeholders: Improved explanations assist stakeholders in understanding how decisions are made, which is crucial for regulatory compliance and ethical considerations. Clear insights into model behavior can also aid in troubleshooting and refining models.

Ethical Considerations: Ensuring that machine learning models are interpretable helps address ethical concerns related to bias and fairness. Transparent models contribute to more equitable decision-making processes.

6. Conclusion

The research concludes with a summary and recommendations:

Summary of Findings: SHAP and LIME are effective tools for enhancing model interpretability. These methods provide valuable insights into feature importance and prediction mechanisms.

Future Research Directions: Future research should focus on developing scalable explanation techniques for large and high-dimensional models and exploring ways to balance interpretability with model accuracy.

Practical Recommendations: Organizations should integrate explanation methods into their machine learning workflows to enhance transparency and ensure that models meet ethical standards.

7. References

Ribeiro, M.T., Singh, S., & Guestrin, C. (2016). "Why Should I Trust You?": Explaining the Predictions of Any Classifier. Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining.

Lundberg, S.M., & Lee, S.-I. (2017). A Unified Approach to Interpreting Model Predictions. Proceedings of the 31st International Conference on Neural Information Processing Systems (NeurIPS).

Caruana, R., Lou, Y., Gehrke, J., Koch, P., Sturm, M., & Elhadad, N. (2015). Intelligible Models for HealthCare: Predicting Pneumonia Risk and Hospital 30-day Readmission. Proceedings of the 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining.