Technical Incident Report

Company: [YOUR COMPANY NAME]

Reported by: [YOUR NAME]

I. Incident Overview

1.1 Description of Incident

On 3 October 2063, at approximately 02:30 hours, a critical failure occurred within the primary data cluster, causing a total outage of our primary service platform. The initial investigation suggests a hardware malfunction that triggered a cascading failure, leading to widespread system unavailability.

1.2 Impact Analysis

The outage affected all users globally and brought transactional processes and administrative functions to a complete halt. It lasted 2 hours, during which users were unable to access their accounts and all ongoing transactions were disrupted.

| Impact Area | Details |
|---|---|
| User Accessibility | All users experienced login failures. |
| Transactional Processes | All ongoing and new transactions were halted. |
| Administrative Functions | System administrators were unable to access backend tools. |

II. Root Cause Analysis

2.1 Initial Findings

Preliminary diagnostics indicated a hardware malfunction in Node-45 of Server Cluster-2. The malfunction was identified as a failing RAID controller, which resulted in data corruption and subsequent system crashes across dependent nodes.
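To illustrate how a single node failure can spread to dependent nodes, the following is a minimal sketch of a breadth-first walk over a dependency map. The map itself, including every node name other than Node-45, is a hypothetical placeholder and not data from this incident; replace it with the real topology of the affected cluster.

```python
from collections import deque

# Hypothetical dependency map (assumption, not taken from the incident):
# each key lists the nodes that depend directly on it.
DEPENDENTS = {
    "Node-45": ["Node-46", "Node-47"],
    "Node-46": ["Node-48"],
    "Node-47": [],
    "Node-48": [],
    "Node-49": [],
}


def affected_nodes(failed, dependents):
    """Return nodes reachable from `failed`, in breadth-first order."""
    seen = {failed}
    queue = deque([failed])
    order = []
    while queue:
        node = queue.popleft()
        for child in dependents.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


if __name__ == "__main__":
    print(affected_nodes("Node-45", DEPENDENTS))
```

A list like this can help scope the impact analysis, since any node that never appears in the result has no recorded dependency on the failed node.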

2.2 Detailed Investigation

The detailed investigation involved multiple steps:

- Conducting a full hardware diagnostic on Node-45.
- Analyzing server logs to trace the failure timeline.
- Reviewing the RAID controller's performance history.
- Cross-referencing with past incidents to identify patterns.
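The log analysis step above could be sketched roughly as follows. The log line format, the sample entries, and the function names are all assumptions made up for illustration; they are not actual logs from this incident, and a real log format will likely need a different parser.

```python
from datetime import datetime

# Invented sample lines in an assumed format:
# "<ISO timestamp> <node> <LEVEL> <message>"
SAMPLE_LOGS = [
    "2063-10-03T02:31:10 Node-46 ERROR filesystem read failure",
    "2063-10-03T02:29:58 Node-45 WARN RAID controller timeout",
    "2063-10-03T02:30:05 Node-45 ERROR RAID controller failed",
    "2063-10-03T02:30:40 Node-46 INFO heartbeat ok",
]


def build_timeline(lines, levels=("WARN", "ERROR")):
    """Parse log lines and return matching events sorted by time."""
    events = []
    for line in lines:
        parts = line.split(" ", 3)
        if len(parts) != 4:
            continue  # skip lines that do not match the assumed format
        stamp, node, level, message = parts
        if level not in levels:
            continue
        events.append((datetime.fromisoformat(stamp), node, level, message))
    events.sort(key=lambda event: event[0])
    return events


def first_failure_per_node(events):
    """Map each node to the time of its first ERROR event."""
    first = {}
    for when, node, level, _message in events:
        if level == "ERROR" and node not in first:
            first[node] = when
    return first


if __name__ == "__main__":
    for when, node, level, message in build_timeline(SAMPLE_LOGS):
        print(when.isoformat(), node, level, message)
```

Sorting by timestamp before looking for the first error per node makes it easier to see which node failed first and which failures followed.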

III. Resolution and Recovery

3.1 Immediate Actions Taken

Upon identifying the faulty RAID controller, the following immediate actions were implemented:

- Isolated Node-45 to prevent further data corruption.
- Engaged backup nodes to restore minimal services.
- Informed the user base about the ongoing issue and estimated downtime.

3.2 Long-Term Solutions

To reduce the risk of recurrence, the following long-term solutions have been proposed:

- Upgrading RAID controllers across all clusters.
- Implementing real-time hardware monitoring tools to detect anomalies early.
- Establishing a more robust failover mechanism to ensure service continuity.
- Regularly updating and stress-testing backup systems.
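As a rough illustration of the monitoring and failover proposals above, the sketch below flags readings that exceed a threshold and then picks the first backup node with no flagged reading. The metric names, threshold values, and node list are hypothetical assumptions; a production setup would draw them from the actual monitoring tool and hardware vendor guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    node: str
    metric: str
    value: float


# Hypothetical metrics and limits (assumptions for illustration only).
THRESHOLDS = {
    "controller_temp_c": 75.0,
    "io_errors_per_min": 5.0,
}


def find_anomalies(readings, thresholds=THRESHOLDS):
    """Return readings whose value exceeds the limit for their metric."""
    return [
        r for r in readings
        if r.value > thresholds.get(r.metric, float("inf"))
    ]


def pick_failover_target(candidates, anomalies):
    """Return the first candidate node with no anomaly, or None."""
    unhealthy = {r.node for r in anomalies}
    for node in candidates:
        if node not in unhealthy:
            return node
    return None


if __name__ == "__main__":
    readings = [
        Reading("Node-45", "io_errors_per_min", 12.0),
        Reading("Node-46", "controller_temp_c", 60.0),
    ]
    anomalies = find_anomalies(readings)
    print(pick_failover_target(["Node-45", "Node-46"], anomalies))
```

Fixed thresholds are the simplest possible detection rule; they are used here only to keep the sketch short, and trend-based checks may catch a degrading controller earlier.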

Additionally, a review of our incident response protocol will be conducted to enhance our operational readiness and efficiency during such critical events.

- 100% Customizable, free editor

- Access 1 Million+ Templates, photo’s & graphics

- Download or share as a template

- Click and replace photos, graphics, text, backgrounds

- Resize, crop, AI write & more

- Access advanced editor

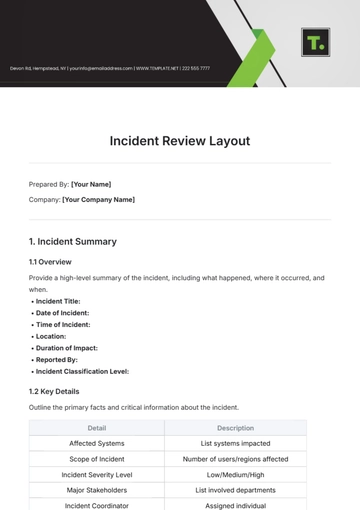

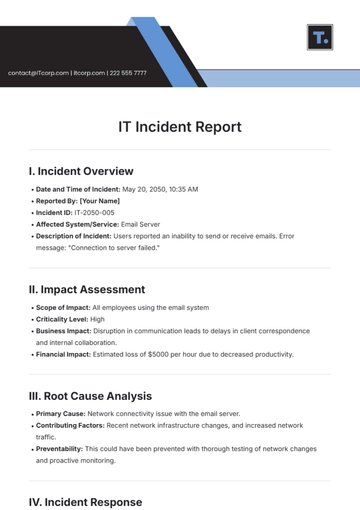

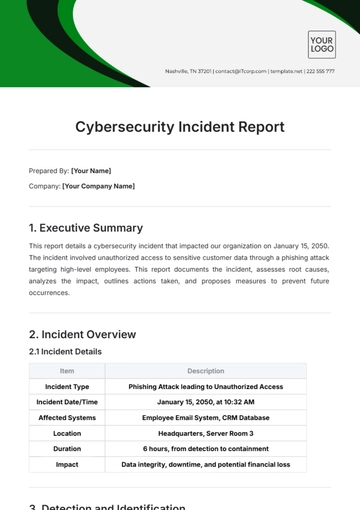

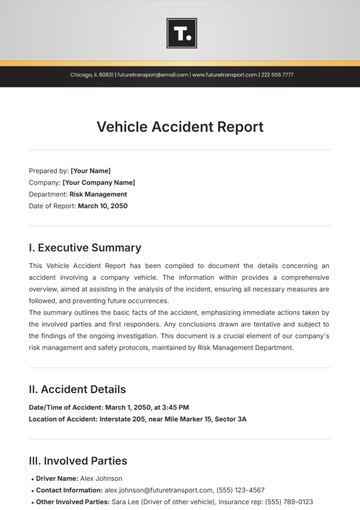

Ensure that every technical incident is properly documented with our Technical Incident Report Template, available on Template.net. This template is designed to capture all essential details related to incidents, ensuring thorough documentation. Customizable for different incident types and editable in our Ai Editor Tool, it guarantees a professional and organized reporting process.

You may also like

- Sales Report

- Daily Report

- Project Report

- Business Report

- Weekly Report

- Incident Report

- Annual Report

- Report Layout

- Report Design

- Progress Report

- Marketing Report

- Company Report

- Monthly Report

- Audit Report

- Status Report

- School Report

- Reports Hr

- Management Report

- Project Status Report

- Handover Report

- Health And Safety Report

- Restaurant Report

- Construction Report

- Research Report

- Evaluation Report

- Investigation Report

- Employee Report

- Advertising Report

- Weekly Status Report

- Project Management Report

- Finance Report

- Service Report

- Technical Report

- Meeting Report

- Quarterly Report

- Inspection Report

- Medical Report

- Test Report

- Summary Report

- Inventory Report

- Valuation Report

- Operations Report

- Payroll Report

- Training Report

- Job Report

- Case Report

- Performance Report

- Board Report

- Internal Audit Report

- Student Report

- Monthly Management Report

- Small Business Report

- Accident Report

- Call Center Report

- Activity Report

- IT and Software Report

- Internship Report

- Visit Report

- Product Report

- Book Report

- Property Report

- Recruitment Report

- University Report

- Event Report

- SEO Report

- Conference Report

- Narrative Report

- Nursing Home Report

- Preschool Report

- Call Report

- Customer Report

- Employee Incident Report

- Accomplishment Report

- Social Media Report

- Work From Home Report

- Security Report

- Damage Report

- Quality Report

- Internal Report

- Nurse Report

- Real Estate Report

- Hotel Report

- Equipment Report

- Credit Report

- Field Report

- Non Profit Report

- Maintenance Report

- News Report

- Survey Report

- Executive Report

- Law Firm Report

- Advertising Agency Report

- Interior Design Report

- Travel Agency Report

- Stock Report

- Salon Report

- Bug Report

- Workplace Report

- Action Report

- Investor Report

- Cleaning Services Report

- Consulting Report

- Freelancer Report

- Site Visit Report

- Trip Report

- Classroom Observation Report

- Vehicle Report

- Final Report

- Software Report